Robots

A few of my old amateur robots.

iRAT

iRAT is a micromouse development kit built at Thinklabs, a robotics startup in India. I worked on it in 2007 as an intern at Thinklabs, together with Anand Ramaswami. iRAT is a modular platform with both top-down and sidewall sensors, so users can choose whichever sensing approach they prefer.

iRAT is based on the AVR ATmega32 RISC microcontroller running at 16 MIPS. It has two high-torque stepper motors, which can be overdriven using the on-board current-chopper circuitry. For easy debugging, the board also has LEDs, a buzzer, an LCD, and a UART port. A simulator and some sample code were also developed for it.

Indur

Indur was my entry in the international micromouse event at Techfest 2008, IIT Bombay's annual festival. It was my first micromouse competition entry.

Indur means mouse in Bengali. The robot was built very quickly, in just a month. As a result, I didn't have time to tune the PID routines to achieve perfectly straight runs, and Indur didn't perform as well as I had hoped. Despite this, Indur made it to the finals of the Techfest 2008 micromouse event and was among the very few robots to reach the centre of the maze.
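
Indur's PID routines are not shown here, so the following is only a minimal sketch of the general idea, not Indur's actual code. It assumes two hypothetical sidewall distance readings (`left_dist`, `right_dist`, in any consistent unit) and a base wheel speed; the controller turns the left/right imbalance into a differential speed correction that pulls the robot back toward the corridor centre.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Discrete PID controller; gains are hypothetical and must be tuned on the robot."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, error: float, dt: float) -> float:
        # Integrate the error and estimate its derivative by a finite difference.
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def wheel_speeds(pid: PID, left_dist: float, right_dist: float,
                 base_speed: float, dt: float) -> tuple[float, float]:
    """Return (left, right) wheel speeds that steer toward the corridor centre.

    A positive error means the left wall is farther away, i.e. the robot has
    drifted toward the right wall, so the left wheel slows and the right wheel
    speeds up to turn the robot back to the left.
    """
    error = left_dist - right_dist
    correction = pid.update(error, dt)
    return base_speed - correction, base_speed + correction


pid = PID(kp=1.0, ki=0.0, kd=0.0)
print(wheel_speeds(pid, 6.0, 4.0, base_speed=100.0, dt=0.01))  # (98.0, 102.0)
```

Initialising `prev_error` from the first reading before a run avoids a spike in the derivative term on the very first update.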

Robots at the IIIT Hyderabad Robotics Lab

During my time at the Robotics Research Lab of IIIT Hyderabad, I worked on several professional robot platforms, predominantly the Pioneer P3DX and the then-new P3AT. On these platforms we implemented various vision algorithms for a mobile robot that can interact with and assist people in a home or office environment. I also worked extensively with stereo and monocular cameras and SICK laser scanners mounted on the Pioneer robots. We also used these robots in a mobile robotics course at IIIT Hyderabad, for which I was both a student and a teaching assistant.

MERP

MERP is a mobile robot platform that I designed and built as a testbed for robotics learning and research. The goal was a cheap, modular platform that is easy to debug at both the hardware and software level. On this platform, I implemented various vision algorithms for a mobile robot that can interact with and assist people in a home or office environment. Its abilities include:

- Speech-based interaction and command execution.

- Face detection and face/person tracking.

- Vision-based person following.

- Ability to read text messages and symbols.

MousEye

This small robot can move around and scan the surface underneath it. I got the idea for it after coming across an article on mouse scanners. Optical mice generally use tiny image sensors; the mouse I hacked has an ADNS-2610 chip with an 18x18 pixel array. The chip is programmable and delivers its output serially, over a simple synchronous (SPI-like) serial interface.
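
As a sketch of what the PC side has to do with a pixel dump, the following function reshapes one frame of 18x18 = 324 pixel bytes into a grid of 8-bit grey levels for display. The byte layout is an assumption for illustration (one pixel per byte, value in the low 6 bits, row-major order); the ADNS-2610 datasheet is the authority on the chip's actual pixel order and status bits.

```python
FRAME_SIZE = 18  # the ADNS-2610 captures an 18x18 pixel image


def assemble_frame(raw: bytes, bits: int = 6) -> list[list[int]]:
    """Turn 324 raw pixel bytes into an 18x18 grid of 0..255 grey levels.

    Assumed (hypothetical) layout: each byte holds one pixel in its low
    `bits` bits, and pixels arrive in row-major order.
    """
    expected = FRAME_SIZE * FRAME_SIZE
    if len(raw) != expected:
        raise ValueError(f"expected {expected} bytes, got {len(raw)}")
    mask = (1 << bits) - 1
    scale = 255 / mask  # stretch 0..mask to the 0..255 display range
    frame = []
    for row in range(FRAME_SIZE):
        start = row * FRAME_SIZE
        # Drop any status bits above the pixel value, then rescale.
        frame.append([round((b & mask) * scale) for b in raw[start:start + FRAME_SIZE]])
    return frame
```

If the real sensor reports pixels column-first, transposing the result (for example with `list(map(list, zip(*frame)))`) is all that changes.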

Though the images are not very visually appealing, the robot can serve as a poor man's document scanner. I interfaced the ADNS-2610 with my PC's parallel port and wrote a simple GUI to display the scanned picture. I also attached two small DC motors to the mouse, so you can sit back in your chair, click a few buttons to drive the MousEye robot around, and scan whichever parts of the document you need.
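
One simple way to build a full scan from many tiny frames is to paste each 18x18 frame into a larger canvas at the position where it was captured. This is only a sketch: the helper names are hypothetical, each tile's position is assumed to be known already (for example from the motor commands or the sensor's own displacement readings), and overlapping pixels are simply overwritten rather than blended.

```python
def new_canvas(width: int, height: int, fill: int = 0) -> list[list[int]]:
    """Create a height x width grey-level image filled with `fill`."""
    return [[fill] * width for _ in range(height)]


def paste_tile(canvas: list[list[int]], tile: list[list[int]], x: int, y: int) -> None:
    """Copy `tile` into `canvas` with its top-left corner at column x, row y.

    Pixels that fall outside the canvas are clipped; pixels that overlap an
    earlier tile overwrite it.
    """
    for r, row in enumerate(tile):
        cy = y + r
        if not 0 <= cy < len(canvas):
            continue  # whole tile row lies above or below the canvas
        for c, value in enumerate(row):
            cx = x + c
            if 0 <= cx < len(canvas[cy]):
                canvas[cy][cx] = value


scan = new_canvas(4, 4)
paste_tile(scan, [[1, 2], [3, 4]], 1, 1)
print(scan)  # [[0, 0, 0, 0], [0, 1, 2, 0], [0, 3, 4, 0], [0, 0, 0, 0]]
```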

Optical mouse sensor chips such as the ADNS-2610 also report displacement readings, so they can serve as an alternative to conventional wheel encoders.
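
To use the sensor as an encoder substitute, the per-read displacement counts are accumulated into a position. This sketch assumes the delta registers hold 8-bit two's-complement counts and that the resolution is 400 counts per inch; both are assumptions to confirm against the datasheet for the configuration in use. It only integrates translation in the sensor's own frame, so if the robot also rotates, its heading has to come from another source.

```python
MM_PER_INCH = 25.4


def to_signed8(raw: int) -> int:
    """Interpret an 8-bit register value as a two's-complement count."""
    return raw - 256 if raw >= 128 else raw


class MouseOdometer:
    """Dead reckoning from optical-mouse displacement counts (hypothetical helper)."""

    def __init__(self, counts_per_inch: float = 400.0):
        self.mm_per_count = MM_PER_INCH / counts_per_inch
        self.x_mm = 0.0
        self.y_mm = 0.0

    def update(self, raw_dx: int, raw_dy: int) -> tuple[float, float]:
        # Each read returns the motion since the previous read, so sum it up.
        self.x_mm += to_signed8(raw_dx) * self.mm_per_count
        self.y_mm += to_signed8(raw_dy) * self.mm_per_count
        return self.x_mm, self.y_mm
```

The registers must be polled often enough that the motion between reads stays within the -128..127 count range; otherwise the counts overflow and distance is lost.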

Maze Solver

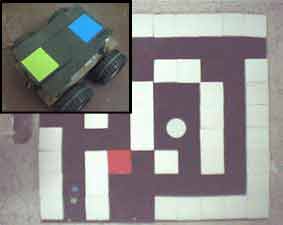

Maze Solver is an autonomous robot that attempts to solve a maze using image processing on frames from an overhead camera. I built it for a robotics competition at Techniche'06, IIT Guwahati's annual techfest.

The objective was to build a robot that could solve the maze in the shortest possible time while avoiding color-coded obstacles (white: unmovable, red: movable), guided by an overhead camera. Images from the overhead webcam were processed on a desktop computer, which then sent movement commands to the robot.
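
Separating the two obstacle colors from the rest of the arena can be done with simple per-pixel thresholds on the webcam image. The threshold values here are made up for illustration, and the sketch assumes the floor is neither bright white nor strongly red; a real setup would calibrate them (or work in HSV) under the actual lighting.

```python
def classify_pixel(r: int, g: int, b: int,
                   white_min: int = 200, red_min: int = 150,
                   red_margin: int = 60) -> str:
    """Label one RGB pixel as 'unmovable' (white), 'movable' (red) or 'free'.

    Threshold defaults are hypothetical and need calibrating for the arena lighting.
    """
    if r >= white_min and g >= white_min and b >= white_min:
        return "unmovable"  # bright in all channels: white obstacle
    if r >= red_min and r - max(g, b) >= red_margin:
        return "movable"  # red clearly dominates: red obstacle
    return "free"  # anything else is treated as drivable floor


def classify_image(pixels: list[list[tuple[int, int, int]]]) -> list[list[str]]:
    """Apply classify_pixel to every (r, g, b) pixel of a row-major image."""
    return [[classify_pixel(*px) for px in row] for row in pixels]
```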

Solving the maze involves four steps. First, the robot is localized in the arena using the overhead camera; a simple pattern on the robot is used to track it and find its orientation. Second, the free path has to be distinguished from the obstacles. Third, the circular landmark is located and the shortest route to it is calculated. Finally, the robot is driven along this route to the target.
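
The localization and route-planning steps can be sketched as follows. The heading comes from the centroids of two hypothetical markers (rear and front) in the robot's pattern, and the route uses breadth-first search over a grid of drivable cells. BFS is one simple choice for an unweighted grid; it is not necessarily the planner Maze Solver used.

```python
import math
from collections import deque
from typing import Optional

Cell = tuple[int, int]


def heading_deg(rear: tuple[float, float], front: tuple[float, float]) -> float:
    """Robot heading in degrees from two marker centroids (x, y) in image pixels.

    Image y grows downward, so dy is flipped to get a counter-clockwise angle
    measured from the +x axis.
    """
    dx = front[0] - rear[0]
    dy = rear[1] - front[1]
    return math.degrees(math.atan2(dy, dx)) % 360


def shortest_path(grid: list[list[bool]], start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """BFS over a 4-connected grid; grid[r][c] is True where the robot may drive.

    Returns the list of (row, col) cells from start to goal, or None if the
    goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    prev: dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            break
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] and nxt not in prev:
                prev[nxt] = cell
                queue.append(nxt)
    if goal not in prev:
        return None
    # Walk the predecessor links back from the goal, then reverse.
    path = []
    step: Optional[Cell] = goal
    while step is not None:
        path.append(step)
        step = prev[step]
    return path[::-1]
```

Because every move costs the same on such a grid, the first time BFS reaches the goal it has found a shortest route in number of cells.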

Apocalypse

Apocalypse is a line-following robot that also has obstacle-detection sensors. It won first prize at three robotics competitions. At the line-following event of Edge at Techno India, Kolkata, Apocalypse clocked the fastest time of 15 seconds; the second-fastest time was 26 seconds. Apocalypse also won the Sliding Door event (a maze-solving contest), crossing the whole grid in 22 seconds.

Apocalypse uses an Atmel AVR ATmega8 microcontroller as its "brain", and an L293D motor driver for its two geared DC motors. LDRs (light-dependent resistors) serve as the sensors. The sensor board is replaceable, so other sensors (such as an IR LED and IR phototransistor pair) can be used with ease. Apocalypse is highly configurable; we configured it for the needs of each event, and it served as a formidable base for many of them.
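
A common way to turn a sensor array into steering commands is to estimate where the line sits under the sensor bar and steer in proportion to that offset. This is a generic sketch, not Apocalypse's firmware: it assumes a left-to-right array of at least two sensors whose readings rise above a calibrated threshold when a sensor is over the line, and 8-bit PWM duty values (0 to 255) for the two L293D channels.

```python
from typing import Optional


def line_position(readings: list[int], threshold: int) -> Optional[float]:
    """Estimate the line's offset under a left-to-right sensor array.

    Returns a value in [-1, 1] (-1 = under the leftmost sensor, +1 = under the
    rightmost), or None if no sensor sees the line. A reading above
    `threshold` is assumed to mean "on the line"; flip the comparison if the
    circuit reads lower over the line.
    """
    n = len(readings)
    if n < 2:
        raise ValueError("need at least two sensors")
    weights = [2 * i / (n - 1) - 1 for i in range(n)]  # evenly spaced in [-1, 1]
    active = [w for w, v in zip(weights, readings) if v > threshold]
    if not active:
        return None
    return sum(active) / len(active)


def _clamp(value: float, low: int, high: int) -> int:
    return max(low, min(high, round(value)))


def motor_commands(position: float, base: int = 200, gain: int = 150,
                   max_pwm: int = 255) -> tuple[int, int]:
    """Proportional steering: return (left, right) PWM duty values.

    A positive position means the line is to the right, so the left wheel
    speeds up and the right wheel slows down to turn right.
    """
    left = _clamp(base + gain * position, 0, max_pwm)
    right = _clamp(base - gain * position, 0, max_pwm)
    return left, right
```

When `line_position` returns None, a typical fallback is to keep turning toward the side where the line was last seen until a sensor picks it up again.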